SpeechForGood

ROLE: UI/UX Designer

TOOLS: NotebookLM, Figma

DURATION: 3 days

SpeechForGood

ROLE: UI/UX Designer

TOOLS: NotebookLM, Figma

DURATION: 3 days

SpeechForGood

ROLE: UI/UX Designer

TOOLS: NotebookLM, Figma

DURATION: 3 days

OVERVIEW

For this 3-day designathon sponsored by UCSC's Silicon Valley Campus and AImpower, I collaborated with three fellow designers to develop a data collection platform that empowers individuals who stutter to contribute speech samples on their own terms, helping build more inclusive AI speech technologies. Our design focused on creating meaningful experiences around consent and transparency. The project earned first place recognition from a distinguished panel including our M.S. program’s Executive Director Yassi Moghaddam, Vice Chair Norman Su, and Jingjin Lin from AImpower.

OVERVIEW

For this 3-day designathon sponsored by UCSC's Silicon Valley Campus and AImpower, I collaborated with three fellow designers to develop a data collection platform that empowers individuals who stutter to contribute speech samples on their own terms, helping build more inclusive AI speech technologies. Our design focused on creating meaningful experiences around consent and transparency. The project earned first place recognition from a distinguished panel including our M.S. program’s Executive Director Yassi Moghaddam, Vice Chair Norman Su, and Jingjin Lin from AImpower.

OVERVIEW

For this 3-day designathon sponsored by UCSC's Silicon Valley Campus and AImpower, I collaborated with three fellow designers to develop a data collection platform that empowers individuals who stutter to contribute speech samples on their own terms, helping build more inclusive AI speech technologies. Our design focused on creating meaningful experiences around consent and transparency. The project earned first place recognition from a distinguished panel including our M.S. program’s Executive Director Yassi Moghaddam, Vice Chair Norman Su, and Jingjin Lin from AImpower.

THE CHALLENGE

Rethinking Data Collection

Current speech recognition technologies (like Siri and Alexa) aren’t built for people with speech impairments. The fix seems simple: train the AI with more data from people who stutter. But here's the catch: why would a community that technology has repeatedly failed trust it with something as personal as their voice? How could we design something that builds trust, offers transparency, and motivates users to contribute their voice?

Within this 3-day timeframe, we split the process into 3 sections:

Day 1: Research

Day 2: Interviews + Ideation

Day 3: Design

THE CHALLENGE

Rethinking Data Collection

Current speech recognition technologies (like Siri and Alexa) aren’t built for people with speech impairments. The fix seems simple: train the AI with more data from people who stutter. But here's the catch: why would a community that technology has repeatedly failed trust it with something as personal as their voice? How could we design something that builds trust, offers transparency, and motivates users to contribute their voice?

Within this 3-day timeframe, we split the process into 3 sections:

Day 1: Research

Day 2: Interviews + Ideation

Day 3: Design

RESEARCH

Guiding Questions

What are the limitations of current speech impediment technology, according to users?

What ethical factors affect speech dataset construction?

What privacy aspects are important to PWS (Persons with Speech Impediments)?

Literature Reviews

7 peer-reviewed articles developed our foundational understanding of the current landscape in Automatic Speech Recognition (ASR) models and their effectiveness in analyzing stuttered speech.

Many also served as a guideline for the ethical considerations (such as informed consent, data privacy, and fairness) and technical considerations (such as variability of speech patterns, model training, and personalization of speech data). We used NotebookLM to efficiently analyze these articles and extract the most relevant insights.

Our analysis gave us an in-depth understanding about speech impairment symptoms (such as stuttering and its various manifestations) and insights into design features that could assist with these symptoms.

User Interviews

Given the scope of our project we opted for a quick, convenient, and cost-effective approach to individuals we knew who had some form of speech impairment. We held in-person interviews with them and asked them about their experiences with speech recognition technologies and how we could make them trust data collection processes more.

Key Takeaways

From the data we analyzed, we determined that the biggest pain points for users were:

Inaccurate processing of speech

Lack of transparency for confidentiality

Data privacy concerns

RESEARCH

Guiding Questions

What are the limitations of current speech impediment technology, according to users?

What ethical factors affect speech dataset construction?

What privacy aspects are important to PWS (Persons with Speech Impediments)?

Literature Reviews

7 peer-reviewed articles developed our foundational understanding of the current landscape in Automatic Speech Recognition (ASR) models and their effectiveness in analyzing stuttered speech.

Many also served as a guideline for the ethical considerations (such as informed consent, data privacy, and fairness) and technical considerations (such as variability of speech patterns, model training, and personalization of speech data). We used NotebookLM to efficiently analyze these articles and extract the most relevant insights.

Our analysis gave us an in-depth understanding about speech impairment symptoms (such as stuttering and its various manifestations) and insights into design features that could assist with these symptoms.

User Interviews

Given the scope of our project we opted for a quick, convenient, and cost-effective approach to individuals we knew who had some form of speech impairment. We held in-person interviews with them and asked them about their experiences with speech recognition technologies and how we could make them trust data collection processes more.

Key Takeaways

From the data we analyzed, we determined that the biggest pain points for users were:

Inaccurate processing of speech

Lack of transparency for confidentiality

Data privacy concerns

IDEATION

Rapid Brainstorming

Considering these pain points, we wrote down all the solutions we could think of on Figjam sticky notes and discussed each one. The solutions shown below are the ones we chose to pursue.

Final Proposal

After aligning and identifying common themes, we agreed on a direction: Gamifying the data collection process.

Our final proposal was an experience where users feed a cute creature recorded pieces of speech. We put emphasis on how we are using user data, explicit consent, community, and the option to retract data at anytime.

IDEATION

Rapid Brainstorming

Considering these pain points, we wrote down all the solutions we could think of on Figjam sticky notes and discussed each one. The solutions shown below are the ones we chose to pursue.

Final Proposal

After aligning and identifying common themes, we agreed on a direction: Gamifying the data collection process.

Our final proposal was an experience where users feed a cute creature recorded pieces of speech. We put emphasis on how we are using user data, explicit consent, community, and the option to retract data at anytime.

THE CHALLENGE

Rethinking Data Collection

Current speech recognition technologies (like Siri and Alexa) aren’t built for people with speech impairments. The fix seems simple: train the AI with more data from people who stutter. But here's the catch: why would a community that technology has repeatedly failed trust it with something as personal as their voice? How could we design something that builds trust, offers transparency, and motivates users to contribute their voice?

Within this 3-day timeframe, we split the process into 3 sections:

Day 1: Research

Day 2: Interviews + Ideation

Day 3: Design

THE CHALLENGE

Rethinking Data Collection

Current speech recognition technologies (like Siri and Alexa) aren’t built for people with speech impairments. The fix seems simple: train the AI with more data from people who stutter. But here's the catch: why would a community that technology has repeatedly failed trust it with something as personal as their voice? How could we design something that builds trust, offers transparency, and motivates users to contribute their voice?

Within this 3-day timeframe, we split the process into 3 sections:

Day 1: Research

Day 2: Interviews + Ideation

Day 3: Design

RESEARCH

Guiding Questions

What are the limitations of current speech impediment technology, according to users?

What ethical factors affect speech dataset construction?

What privacy aspects are important to PWS (Persons with Speech Impediments)?

Literature Reviews

7 peer-reviewed articles developed our foundational understanding of the current landscape in Automatic Speech Recognition (ASR) models and their effectiveness in analyzing stuttered speech.

Many also served as a guideline for the ethical considerations (such as informed consent, data privacy, and fairness) and technical considerations (such as variability of speech patterns, model training, and personalization of speech data). We used NotebookLM to efficiently analyze these articles and extract the most relevant insights.

Our analysis gave us an in-depth understanding about speech impairment symptoms (such as stuttering and its various manifestations) and insights into design features that could assist with these symptoms.

User Interviews

Given the scope of our project we opted for a quick, convenient, and cost-effective approach to individuals we knew who had some form of speech impairment. We held in-person interviews with them and asked them about their experiences with speech recognition technologies and how we could make them trust data collection processes more.

Key Takeaways

From the data we analyzed, we determined that the biggest pain points for users were:

Inaccurate processing of speech

Lack of transparency for confidentiality

Data privacy concerns

RESEARCH

Guiding Questions

What are the limitations of current speech impediment technology, according to users?

What ethical factors affect speech dataset construction?

What privacy aspects are important to PWS (Persons with Speech Impediments)?

Literature Reviews

7 peer-reviewed articles developed our foundational understanding of the current landscape in Automatic Speech Recognition (ASR) models and their effectiveness in analyzing stuttered speech.

Many also served as a guideline for the ethical considerations (such as informed consent, data privacy, and fairness) and technical considerations (such as variability of speech patterns, model training, and personalization of speech data). We used NotebookLM to efficiently analyze these articles and extract the most relevant insights.

Our analysis gave us an in-depth understanding about speech impairment symptoms (such as stuttering and its various manifestations) and insights into design features that could assist with these symptoms.

User Interviews

Given the scope of our project we opted for a quick, convenient, and cost-effective approach to individuals we knew who had some form of speech impairment. We held in-person interviews with them and asked them about their experiences with speech recognition technologies and how we could make them trust data collection processes more.

Key Takeaways

From the data we analyzed, we determined that the biggest pain points for users were:

Inaccurate processing of speech

Lack of transparency for confidentiality

Data privacy concerns

IDEATION

Rapid Brainstorming

Considering these pain points, we wrote down all the solutions we could think of on Figjam sticky notes and discussed each one. The solutions shown below are the ones we chose to pursue.

Final Proposal

After aligning and identifying common themes, we agreed on a direction: Gamifying the data collection process.

Our final proposal was an experience where users feed a cute creature recorded pieces of speech. We put emphasis on how we are using user data, explicit consent, community, and the option to retract data at anytime.

IDEATION

Rapid Brainstorming

Considering these pain points, we wrote down all the solutions we could think of on Figjam sticky notes and discussed each one. The solutions shown below are the ones we chose to pursue.

Final Proposal

After aligning and identifying common themes, we agreed on a direction: Gamifying the data collection process.

Our final proposal was an experience where users feed a cute creature recorded pieces of speech. We put emphasis on how we are using user data, explicit consent, community, and the option to retract data at anytime.

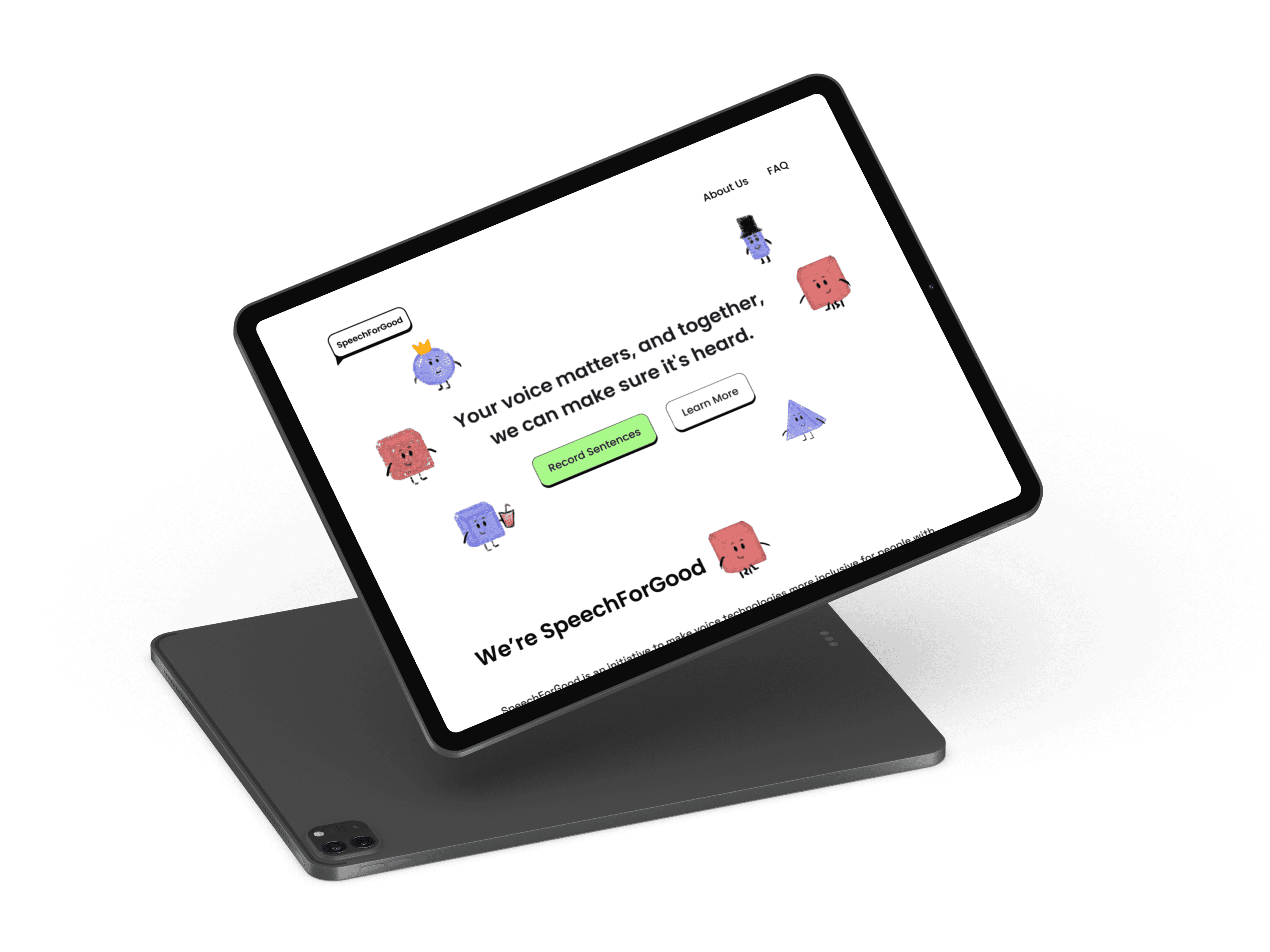

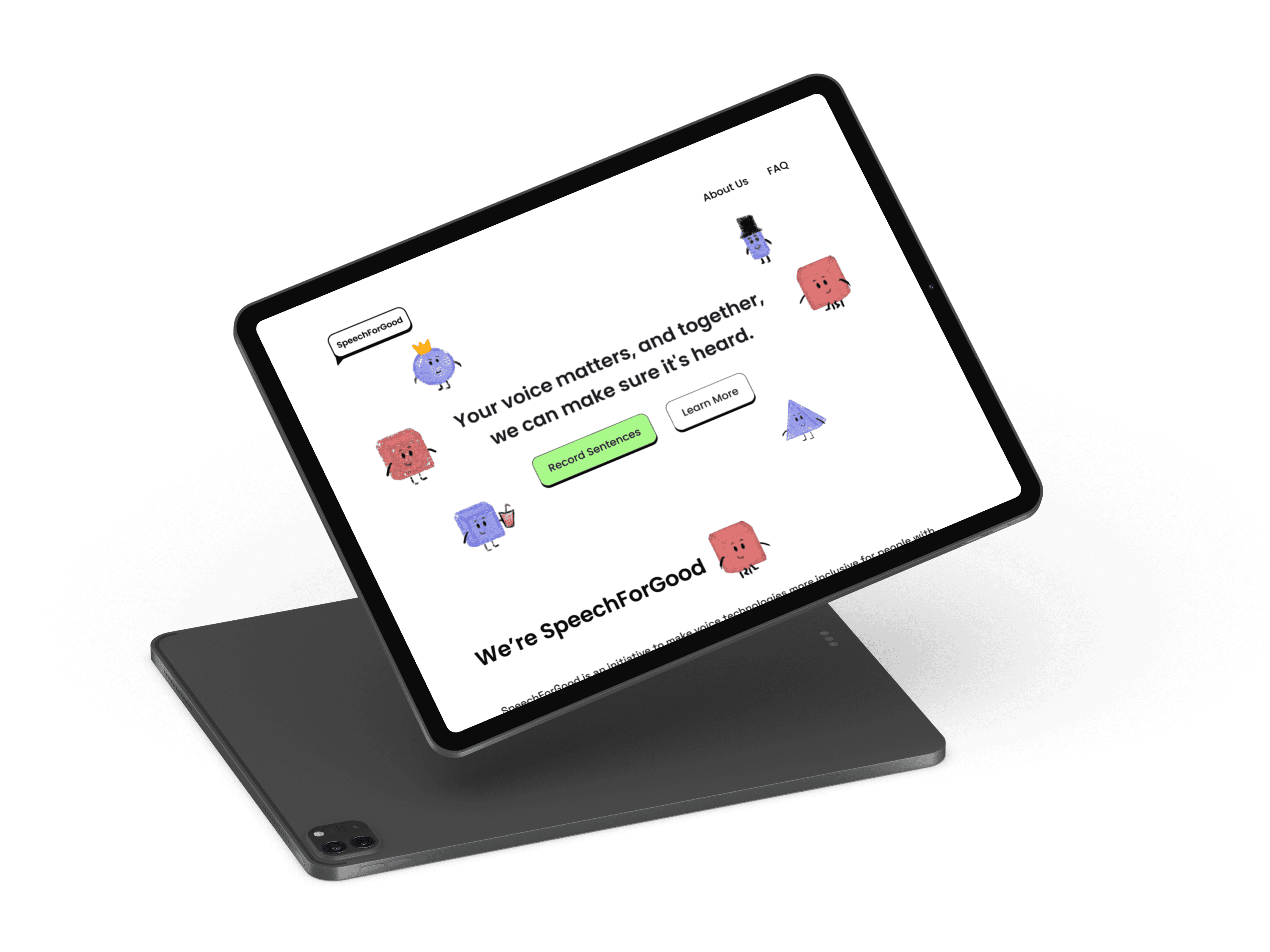

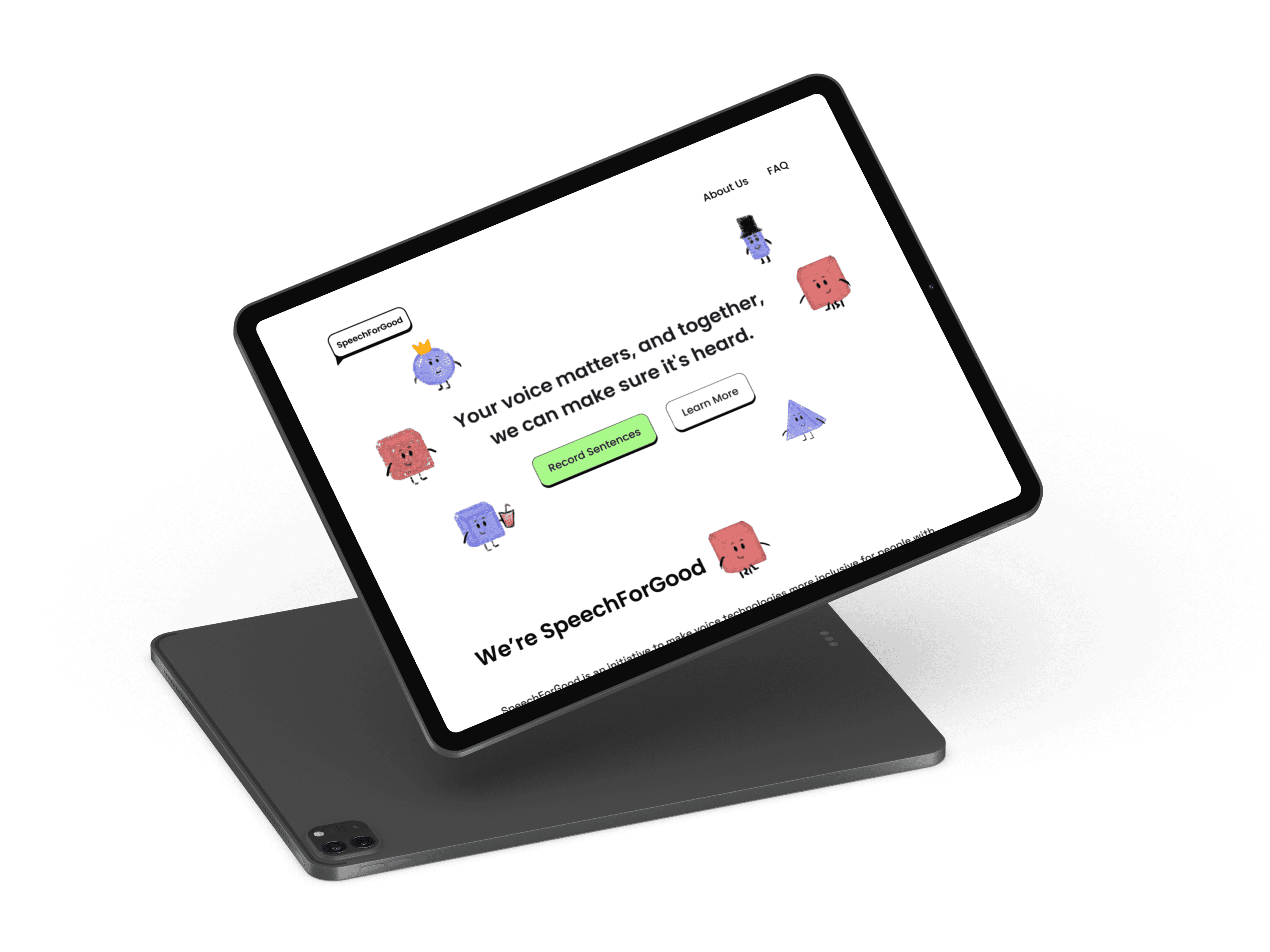

DESIGNS

Home Page: Emphasizing Purpose and Community

Being the first page users see, we accurately conveyed important information surrounding the project, and its purpose. Community building is also a vital aspect of this domain, given the general reluctance of PWS to contribute samples.

Before the recording process: Building transparency

We made sure to let users know before they start that they have full control over their recording files. We avoided account creation as it could’ve been a barrier.

Recording: Covering more phonetic variations

Different words and sentence structures bring out different combinations of sounds, which helps train more accurate speech recognition or analysis models. For each recording contributed, the user earns XP.

Additionally, providing the option to record in different languages helps create a dataset that can be language inclusive. Our literature indicates a research scope constrained to one language (English), but hopes for more efficient expansion to other languages and disorders (MacDonald et al., 2021).

Playback: Comfort and Control

Replaying and re-recording options allow users to take their time and make adjustments without feeling like they contributed poorly to training models.

Recording: Covering more phonetic variations

Having a direct way to access and manage their data reinforces that user consent and comfort are a priority.

For gamification, we chose to focus on a single element, XP, to encourage user participation and drive engagement. XP is granted upon submission of recorded audio. It can be spent to customize a user's personal creature.

DESIGNS

Home Page: Emphasizing Purpose and Community

Being the first page users see, we accurately conveyed important information surrounding the project, and its purpose. Community building is also a vital aspect of this domain, given the general reluctance of PWS to contribute samples.

Before the recording process: Building transparency

We made sure to let users know before they start that they have full control over their recording files. We avoided account creation as it could’ve been a barrier.

Recording: Covering more phonetic variations

Different words and sentence structures bring out different combinations of sounds, which helps train more accurate speech recognition or analysis models. For each recording contributed, the user earns XP.

Additionally, providing the option to record in different languages helps create a dataset that can be language inclusive. Our literature indicates a research scope constrained to one language (English), but hopes for more efficient expansion to other languages and disorders (MacDonald et al., 2021).

Playback: Comfort and Control

Replaying and re-recording options allow users to take their time and make adjustments without feeling like they contributed poorly to training models.

Recording: Covering more phonetic variations

Having a direct way to access and manage their data reinforces that user consent and comfort are a priority.

For gamification, we chose to focus on a single element, XP, to encourage user participation and drive engagement. XP is granted upon submission of recorded audio. It can be spent to customize a user's personal creature.

DESIGNS

Home Page: Emphasizing Purpose and Community

Being the first page users see, we accurately conveyed important information surrounding the project, and its purpose. Community building is also a vital aspect of this domain, given the general reluctance of PWS to contribute samples.

Before the recording process: Building transparency

We made sure to let users know before they start that they have full control over their recording files. We avoided account creation as it could’ve been a barrier.

Recording: Covering more phonetic variations

Different words and sentence structures bring out different combinations of sounds, which helps train more accurate speech recognition or analysis models. For each recording contributed, the user earns XP.

Additionally, providing the option to record in different languages helps create a dataset that can be language inclusive. Our literature indicates a research scope constrained to one language (English), but hopes for more efficient expansion to other languages and disorders (MacDonald et al., 2021).

Playback: Comfort and Control

Replaying and re-recording options allow users to take their time and make adjustments without feeling like they contributed poorly to training models.

Recording: Covering more phonetic variations

Having a direct way to access and manage their data reinforces that user consent and comfort are a priority.

For gamification, we chose to focus on a single element, XP, to encourage user participation and drive engagement. XP is granted upon submission of recorded audio. It can be spent to customize a user's personal creature.

CONCLUSION

After 3 days, we presented to our peers and a panel of judges and won first place.

Reflecting on the experience, creating Speech For Good pushed our team to prioritize empathy in every design decision. Technology isn't neutral, and the systems we build sometimes exclude entire groups. No one should face friction simply because they don't fit within the "norm," yet this happens constantly with AI products. We must actively create space for diverse voices in the design process, ensuring the technologies we build serve everyone equitably.

Overall, this project left me with a deeper understanding of ethical AI, the nuances of inclusive design, and the responsibility we carry as designers to create with, not just for, our users. By centering transparency, consent, and community benefits, my team and I reimagined the data collection experience as one rooted in empowerment.

CONCLUSION

After 3 days, we presented to our peers and a panel of judges and won first place.

Reflecting on the experience, creating Speech For Good pushed our team to prioritize empathy in every design decision. Technology isn't neutral, and the systems we build sometimes exclude entire groups. No one should face friction simply because they don't fit within the "norm," yet this happens constantly with AI products. We must actively create space for diverse voices in the design process, ensuring the technologies we build serve everyone equitably.

Overall, this project left me with a deeper understanding of ethical AI, the nuances of inclusive design, and the responsibility we carry as designers to create with, not just for, our users. By centering transparency, consent, and community benefits, my team and I reimagined the data collection experience as one rooted in empowerment.

CONCLUSION

After 3 days, we presented to our peers and a panel of judges and won first place.

Reflecting on the experience, creating Speech For Good pushed our team to prioritize empathy in every design decision. Technology isn't neutral, and the systems we build sometimes exclude entire groups. No one should face friction simply because they don't fit within the "norm," yet this happens constantly with AI products. We must actively create space for diverse voices in the design process, ensuring the technologies we build serve everyone equitably.

Overall, this project left me with a deeper understanding of ethical AI, the nuances of inclusive design, and the responsibility we carry as designers to create with, not just for, our users. By centering transparency, consent, and community benefits, my team and I reimagined the data collection experience as one rooted in empowerment.